Replicating a testing environment can be a near-impossible task when a lab uses custom equipment, yet it can be critical for a developer who needs to fully understand a customer’s problem and build a product specifically for that customer. One such task involved recreating a networking environment unique to a customer’s lab.

A customer came to DornerWorks with an issue found in their lab while testing two of our 1Gb Ethernet Space Switches. The issue slowed the switches’ software processing to the point that heavy packet loss across the network kept the customer from continuing their testing procedures. The challenge for DornerWorks was twofold: the customer’s network used multiple pieces of custom equipment that we could not access, and the issue appeared only after the customer ran a specific testing procedure in their lab. We needed a way to virtualize their network topology in our lab.

Each network is unique because of the different devices that receive and transmit data. These devices can perform specific tasks on a network such as streaming video, transferring files, or forwarding data to another device. In many cases, these devices are developed to operate in a specific environment and are not available for a developer to use for performing tests or debugging.

If a customer finds a specific issue in their lab, the developer may not be able to recreate it in their own. The developer instead needs to find a creative way to virtualize these networking devices and mimic the traffic moving among them.

The Layer-3 Switch developers at DornerWorks were able to do just that using a helpful piece of equipment called the ValkyrieBay from Xena Networks.

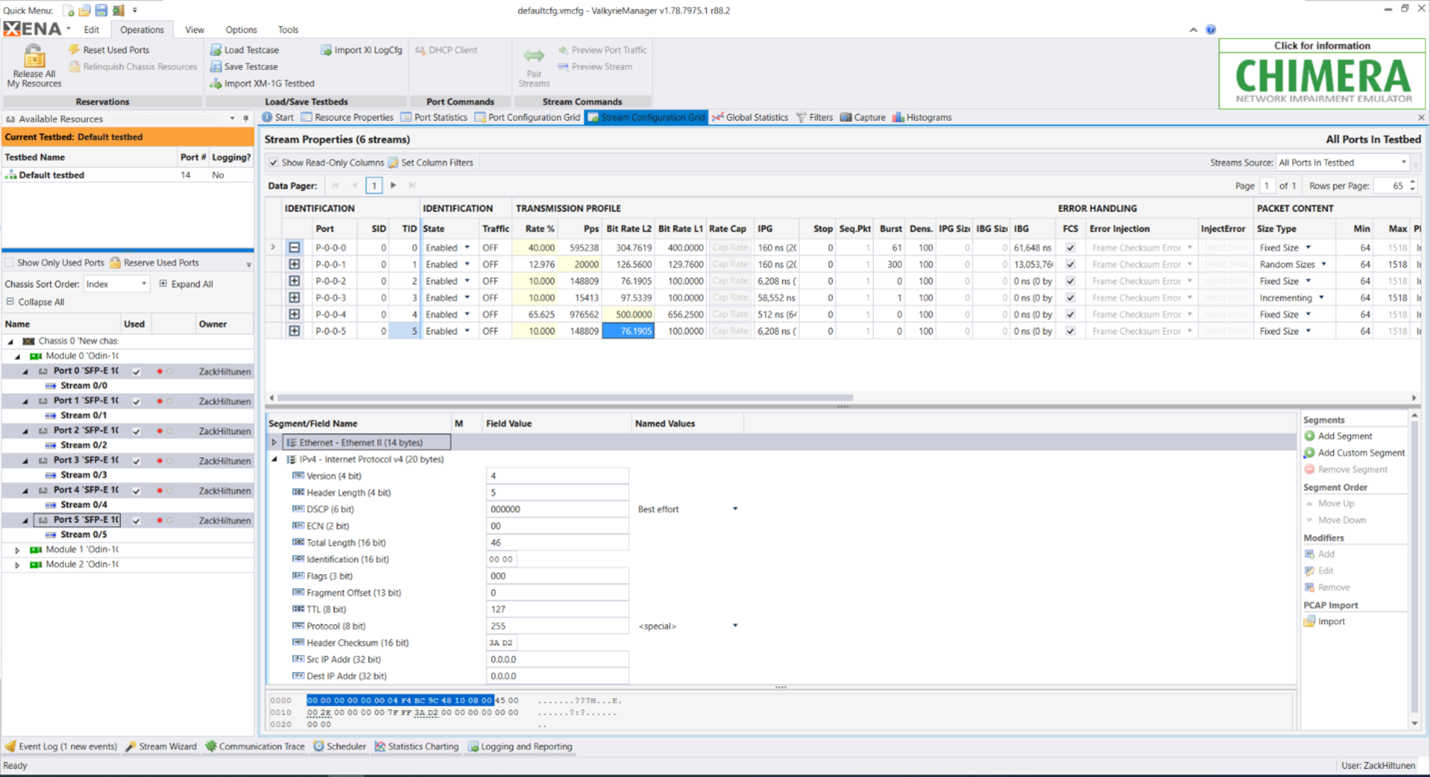

The ValkyrieBay test modules configure and generate Layer 2 and Layer 3 Ethernet traffic, and the ValkyrieBay chassis and its test modules are controlled by the ValkyrieManager software. In ValkyrieManager, users can create and run unique test scenarios to establish a performance baseline for how a network device routes Ethernet packets.

One reason the engineers chose the ValkyrieBay over another network performance tool such as iPerf to emulate the customer’s network was the way ValkyrieManager reports real-time data during a test. ValkyrieManager reports the traffic data for each individual stream and updates it every second. This was critical for monitoring when the device started to fail: with all the data in one window, the engineers could spot an issue and move quickly to investigate. With iPerf, each stream of traffic would have needed its own client and server process, leaving many processes and windows to watch continuously. That adds up quickly when emulating multiple network devices.
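To make the value of per-stream, per-second statistics concrete, here is a minimal Python sketch that scans one-second transmit and receive counters for each stream and reports the first second in which loss crosses a threshold. It assumes the counters have already been exported from the test tool as plain lists; the function names and the 1% default threshold are hypothetical and are not part of ValkyrieManager.

```python
from typing import Dict, List, Optional, Tuple

def first_loss_second(tx_per_sec: List[int], rx_per_sec: List[int],
                      threshold: float = 0.01) -> Optional[int]:
    """Index of the first one-second interval whose loss ratio exceeds `threshold`, else None."""
    for second, (tx, rx) in enumerate(zip(tx_per_sec, rx_per_sec)):
        if tx == 0:
            continue  # nothing was sent in this interval, so loss is undefined
        if (tx - rx) / tx > threshold:
            return second
    return None

def loss_onset_report(streams: Dict[str, Tuple[List[int], List[int]]],
                      threshold: float = 0.01) -> Dict[str, Optional[int]]:
    """Apply first_loss_second to every named stream of (tx, rx) per-second counters."""
    return {name: first_loss_second(tx, rx, threshold) for name, (tx, rx) in streams.items()}
```

For example, `loss_onset_report({"video": ([1000] * 5, [1000, 1000, 1000, 700, 650])})` returns `{"video": 3}`. Because received counts can trail transmitted counts by a few in-flight packets at each second boundary, a small nonzero threshold keeps that lag from being flagged as loss.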

The developers’ objective was to use ValkyrieManager to simulate the unique Ethernet traffic flowing through the customer’s network in order to find and solve a critical issue.

The first step was to emulate the different network devices connected to the ethernet switches.

The customer’s network topology included the two Layer 3 Ethernet switches, each with six to ten connected test devices. Emulating it required 15 Ethernet ports on the ValkyrieBay, two ports to carry data between the two switches, and two more Ethernet ports connected to Linux PCs for running various tests.

The next step was to set up the streams of traffic that would move through the network.

Parsing packet capture (PCAP) files from the customer’s lab allowed the developers to calculate the bandwidth, packet sizes, and burstiness of each stream, and to set up the protocol headers needed to move packets between the different IP subnets used on the network.
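The stream profiling described above can be sketched with the Python standard library alone. This is an illustrative sketch, not the developers’ actual tooling: it assumes classic libpcap files (not pcapng) captured on Ethernet, groups IPv4 packets into streams by source address, destination address, and protocol, and measures burstiness as the coefficient of variation of packet inter-arrival times, which is one common choice among several. The function names are hypothetical.

```python
import ipaddress
import statistics
import struct
from collections import defaultdict
from typing import BinaryIO, Dict, Iterator, Optional, Tuple

StreamKey = Tuple[str, str, int]  # (source IP, destination IP, IP protocol number)

# Classic libpcap magic numbers as they appear on disk -> (struct endianness, timestamp divisor)
_MAGICS = {
    b"\xd4\xc3\xb2\xa1": ("<", 1e6),  # little-endian, microsecond timestamps
    b"\xa1\xb2\xc3\xd4": (">", 1e6),  # big-endian, microsecond timestamps
    b"\x4d\x3c\xb2\xa1": ("<", 1e9),  # little-endian, nanosecond timestamps
    b"\xa1\xb2\x3c\x4d": (">", 1e9),  # big-endian, nanosecond timestamps
}

def read_pcap(f: BinaryIO) -> Iterator[Tuple[float, int, bytes]]:
    """Yield (timestamp_s, wire_length, captured_bytes) for each record in a classic .pcap file."""
    header = f.read(24)
    if header[:4] not in _MAGICS:
        raise ValueError("not a classic pcap file (pcapng is not handled here)")
    endian, ts_div = _MAGICS[header[:4]]
    if struct.unpack(endian + "I", header[20:24])[0] != 1:
        raise ValueError("only Ethernet (linktype 1) captures are handled")
    while True:
        record = f.read(16)
        if len(record) < 16:
            break
        ts_sec, ts_frac, incl_len, orig_len = struct.unpack(endian + "IIII", record)
        yield ts_sec + ts_frac / ts_div, orig_len, f.read(incl_len)

def ipv4_key(frame: bytes) -> Optional[StreamKey]:
    """Return (src, dst, protocol) for an Ethernet/IPv4 frame, skipping one 802.1Q tag."""
    if len(frame) < 14:
        return None
    ethertype, offset = struct.unpack("!H", frame[12:14])[0], 14
    if ethertype == 0x8100 and len(frame) >= 18:
        ethertype, offset = struct.unpack("!H", frame[16:18])[0], 18
    if ethertype != 0x0800 or len(frame) < offset + 20:
        return None
    ip = frame[offset:offset + 20]
    return (str(ipaddress.IPv4Address(ip[12:16])), str(ipaddress.IPv4Address(ip[16:20])), ip[9])

def profile_streams(f: BinaryIO) -> Dict[StreamKey, dict]:
    """Group IPv4 packets into streams and summarize size, bandwidth and burstiness."""
    packets = defaultdict(list)  # StreamKey -> [(timestamp, wire_length), ...]
    for ts, wire_len, frame in read_pcap(f):
        key = ipv4_key(frame)
        if key is not None:
            packets[key].append((ts, wire_len))
    report = {}
    for key, pkts in packets.items():
        pkts.sort()
        times = [t for t, _ in pkts]
        sizes = [s for _, s in pkts]
        duration = times[-1] - times[0]
        gaps = [b - a for a, b in zip(times, times[1:])]
        mean_gap = statistics.mean(gaps) if gaps else 0.0
        report[key] = {
            "packets": len(sizes),
            "min_bytes": min(sizes),
            "max_bytes": max(sizes),
            "mean_bytes": statistics.mean(sizes),
            # Average rate between the first and last packet (approximate for short captures)
            "bandwidth_bps": 8 * sum(sizes) / duration if duration > 0 else 0.0,
            # Coefficient of variation of inter-arrival gaps: ~0 when steady, larger when bursty
            "burstiness_cv": statistics.pstdev(gaps) / mean_gap if mean_gap > 0 else 0.0,
        }
    return report
```

Pass `profile_streams` a capture opened in binary mode (`open(path, "rb")`); it returns one summary per stream, which maps naturally onto per-stream generator settings such as packet size, rate, and burst shape.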

Beyond requiring multiple subnets, the network carried several multicast streams. Multicast lets routers and switches deliver a packet to every endpoint subscribed to a group without sending it to endpoints that do not need it, which helps avoid unnecessary congestion on the network.
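As a rough model of the multicast behavior described above, the Python sketch below keeps a table of which switch ports have subscribed to each group and forwards a group’s packets only to those ports. It also shows the standard mapping from an IPv4 multicast group to its Ethernet destination MAC address (the fixed `01:00:5e` prefix plus the low 23 bits of the group address, per RFC 1112), which matters when building multicast stream headers. `MulticastTable` is a hypothetical toy, not a description of how the switches in this post are implemented.

```python
import ipaddress
from collections import defaultdict
from typing import Dict, Set

def multicast_mac(group: str) -> str:
    """Map an IPv4 multicast group to its Ethernet MAC: 01:00:5e plus the group's low 23 bits."""
    addr = ipaddress.IPv4Address(group)
    if not addr.is_multicast:
        raise ValueError(f"{group} is not an IPv4 multicast address")
    low23 = int(addr) & 0x7FFFFF
    octets = [0x01, 0x00, 0x5E, low23 >> 16, (low23 >> 8) & 0xFF, low23 & 0xFF]
    return ":".join(f"{octet:02x}" for octet in octets)

class MulticastTable:
    """Toy switch model: forward a group only to ports that subscribed to it."""

    def __init__(self) -> None:
        self.members: Dict[str, Set[int]] = defaultdict(set)

    def join(self, group: str, port: int) -> None:
        self.members[group].add(port)

    def leave(self, group: str, port: int) -> None:
        self.members[group].discard(port)

    def egress_ports(self, group: str, ingress_port: int) -> Set[int]:
        # Never send a packet back out of the port it arrived on
        return self.members.get(group, set()) - {ingress_port}
```

Because only 23 of a group address’s 28 variable bits reach the MAC address, 32 IPv4 groups share each multicast MAC; `239.1.1.1` and `224.129.1.1`, for instance, both map to `01:00:5e:01:01:01`.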

The ValkyrieManager client can add multiple multicast subscriptions on each port to help emulate the customer’s network.
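On the wire, a host’s multicast subscription is usually expressed as an IGMP membership report. The post does not say how ValkyrieManager implements its subscriptions, so treat this as background: a minimal Python sketch, assuming IGMPv2 (RFC 2236), that builds the 8-byte Membership Report a host sends to join a group, including the RFC 1071 Internet checksum. It builds only the IGMP message, not the enclosing IPv4 header (which normally carries TTL 1 and the Router Alert option).

```python
import ipaddress
import struct

IGMPV2_MEMBERSHIP_REPORT = 0x16  # message type from RFC 2236

def internet_checksum(data: bytes) -> int:
    """RFC 1071 checksum: one's complement of the one's-complement sum of 16-bit words."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back into 16 bits
    return ~total & 0xFFFF

def igmpv2_report(group: str) -> bytes:
    """Build the 8-byte IGMPv2 Membership Report a host sends to join `group`."""
    addr = ipaddress.IPv4Address(group)
    if not addr.is_multicast:
        raise ValueError(f"{group} is not an IPv4 multicast address")
    # Type, Max Resp Time (0 in reports), Checksum (0 while computing), Group Address
    draft = struct.pack("!BBH4s", IGMPV2_MEMBERSHIP_REPORT, 0, 0, addr.packed)
    return struct.pack("!BBH4s", IGMPV2_MEMBERSHIP_REPORT, 0, internet_checksum(draft), addr.packed)
```

A receiver validates the message by running the same checksum over all eight bytes, checksum field included, and expecting zero.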

After setting up each stream and configuring all the necessary endpoints, the last step was to create the scenario that caused the high processor load on the Ethernet switches. The scenario involved turning off one endpoint and turning on another. Once the scenario starts, the switches must begin routing the traffic destined for the endpoint that was turned off to the newly activated one.

After the endpoint swap, the customer saw a higher rate of dropped Ethernet packets on the network. The swap was an important step in the customer’s testing and served as a performance benchmark tied to their goals for the device’s normal operation.

Before release, the developers needed a way to automate the timing of the swap. Running the test scenario manually risked missing the window in which the slowdown occurs, which is exactly the window needed for debugging.

The ValkyrieManager includes a Scheduler tab which can do various tasks during a test such as enabling or disabling traffic on a port, enabling or disabling port functions such as replying to ARP requests or PING messages, and waiting a certain time period before executing the next task.

We created a schedule to run the following test steps:

Disabling ARP/PING replies, rather than setting the port’s link state down, takes the emulated endpoint offline while keeping the link up, so ValkyrieManager can still use its capture feature to record incoming packets on that specific interface.
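The endpoint swap can be pictured as an ordered list of timed actions. The Python sketch below is hypothetical: `EmulatedPort`, `build_swap_schedule`, the port names, and the wait times are illustrative assumptions, not ValkyrieManager’s API and not the actual schedule used in this test. It models the choice explained above by toggling ARP/PING replies while the link stays up, so capture remains possible on the port being taken offline.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class EmulatedPort:
    """Hypothetical stand-in for a traffic-generator port (not the ValkyrieManager API)."""
    name: str
    link_up: bool = True
    replies_enabled: bool = True  # whether the port answers ARP requests and pings
    traffic_on: bool = False

    def can_capture(self) -> bool:
        # Capturing needs the link up, so the swap toggles replies instead of link state
        return self.link_up

Step = Tuple[str, Callable[[], None]]

def build_swap_schedule(source: EmulatedPort, old: EmulatedPort, new: EmulatedPort,
                        settle_s: float, observe_s: float,
                        sleep: Callable[[float], None] = time.sleep) -> List[Step]:
    """Hypothetical endpoint-swap schedule: each step is (description, action)."""
    return [
        (f"start traffic on {source.name}", lambda: setattr(source, "traffic_on", True)),
        (f"wait {settle_s} s for steady state", lambda: sleep(settle_s)),
        (f"disable ARP/PING replies on {old.name}", lambda: setattr(old, "replies_enabled", False)),
        (f"enable ARP/PING replies on {new.name}", lambda: setattr(new, "replies_enabled", True)),
        (f"wait {observe_s} s while the switches re-route", lambda: sleep(observe_s)),
    ]

def run_schedule(steps: List[Step]) -> List[str]:
    """Execute each step in order and return the descriptions of completed steps."""
    completed = []
    for description, action in steps:
        action()
        completed.append(description)
    return completed
```

Passing `sleep` in as a parameter lets the same schedule run instantly in a dry run (for example with `sleep=print`) and with real delays during a test.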

With the schedule created, the test was ready to begin. The test transmitted the various traffic streams throughout the emulated network, and as soon as the schedule swapped traffic between the ports, the slowdown occurred. After further debugging, the developers identified and resolved the issue. They repeated the test multiple times and verified the fix in both the DornerWorks lab and the customer’s lab.

By configuring a unique test environment in the DornerWorks lab, the developers recreated the issue within a short period of time, and the customer was able to move forward with their testing.

If your team is losing sleep over the prospect of producing reliable product testing results, call our team for a free meeting. We will collaborate with you on a plan to turn your ideas into reality.